Published Jun 27, 2019

Is Our AI on the Way to Becoming Control?

The powerful computer easily took control on 'Discovery,' and in some ways, that's not so far-fetched.

StarTrek.com

Star Trek’s stories are infused with myriad, complex technologies — many of which can’t easily be explained even by engineers. (How do the transporters really work?) Trek’s fictional future is one not so hard to imagine, as we, too, are already surrounded by smartphones and computer software designed to learn and evolve beyond their original programming. But what if they break down or learn too much?

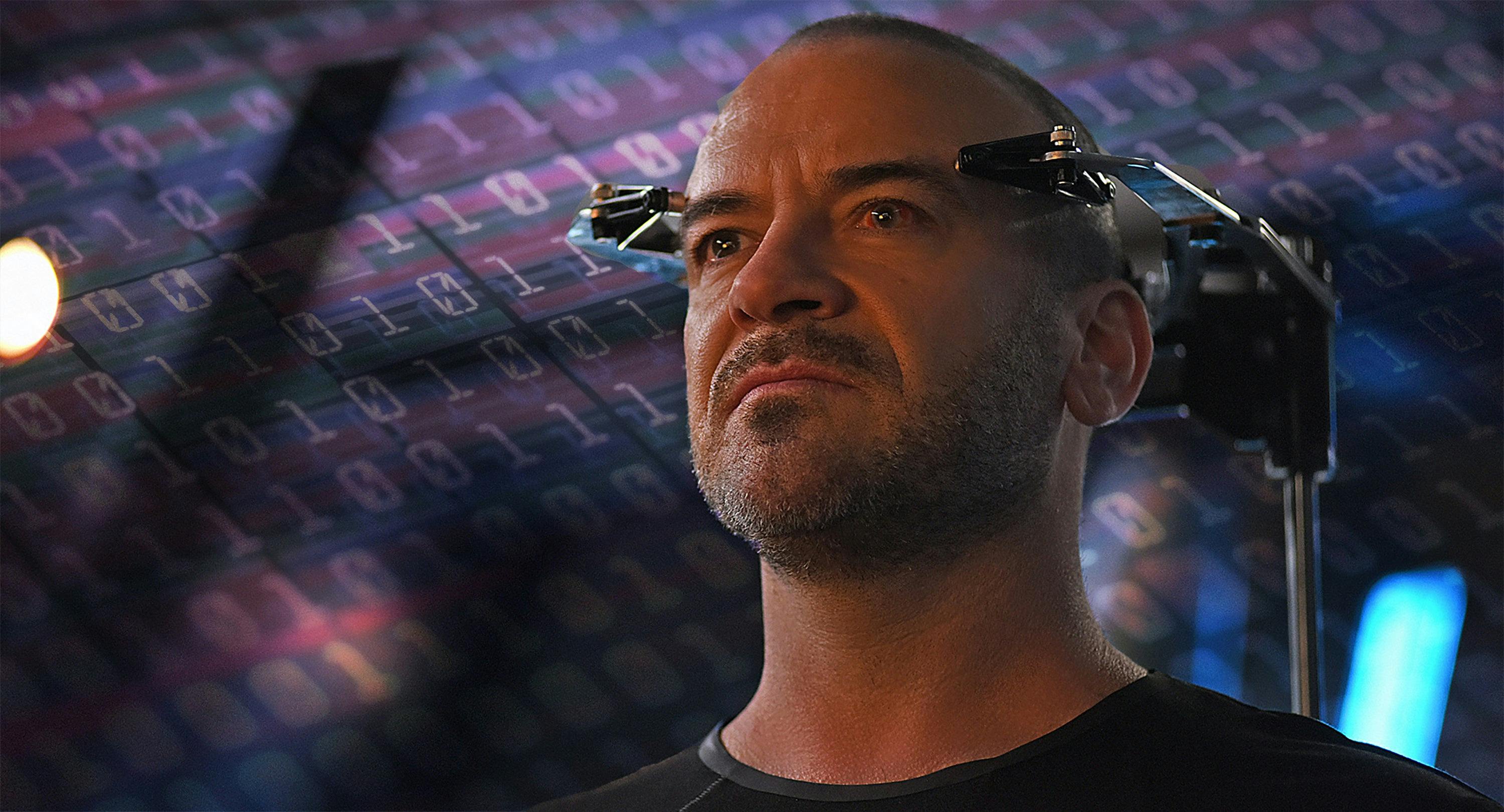

In the second season of Star Trek: Discovery, a sophisticated computer program known as Control ran amok, bent on fulfilling its mission in a way its human designers never intended. It took over computer systems and eventually starships and space stations. It also hacked into a human-robot hybrid and deployed swarms of nanobots for nefarious purposes. We’ve seen positive or misunderstood consequences of artificial intelligence gaining self-consciousness — like the nanites and exocomps in The Next Generation — but AI with a truly malicious intent always hovered in the background.

StarTrek.com

Back here in the 21st century, we have more practical AI-related concerns looming before us. For better or worse, AI will soon fill our lives, with computer algorithms running our cars and trains, and robots proliferating everywhere from grocery stores to factories. We must also consider increasingly autonomous military drones and missiles.

“Several decades is an almost incomprehensible timeline in terms of the rapidity with which the technology is changing, being understood, deployed and regulated,” says Adam Holland, a project manager at the Berkman Klein Center for Internet & Society at Harvard.

Holland points out that the biggest expansion of AI might be in smart devices, not just in our pockets but also throughout appliances and electronics in our homes, vehicles, and workplaces. Algorithms with friendlier names than Control, like Alexa and Siri, nonetheless have access to massive amounts of our personal information. Google, Facebook, Amazon, and Apple already know more about us than we might realize, and he argues that this panopticon of commercial surveillance opens the door to potentially creepy implications: hyper-targeted ads, say, whether we want them or not, and provisions in health insurance or employment contracts involving issues we’ve never told anyone about.

And every AI program has an error rate. No matter how much you train your algorithms and how well you prepare for unlikely scenarios, there’s always a chance of a malfunction or of something you hadn’t anticipated. For example, even if self-driving cars, trucks, and buses work the way they’re supposed to 99% of the time, that remaining 1% of, say, hard-to-identify pedestrians or unforeseen terrain could lead to deadly collisions much more often than we want. Additionally, many programs have built-in biases, such as the ones found in algorithms that police agencies use to predict crime in targeted neighborhoods, in facial recognition, and elsewhere in the criminal justice system. Programs used for hiring and promotions could also easily come with gender- and race-based stereotypes built into them.

StarTrek.com

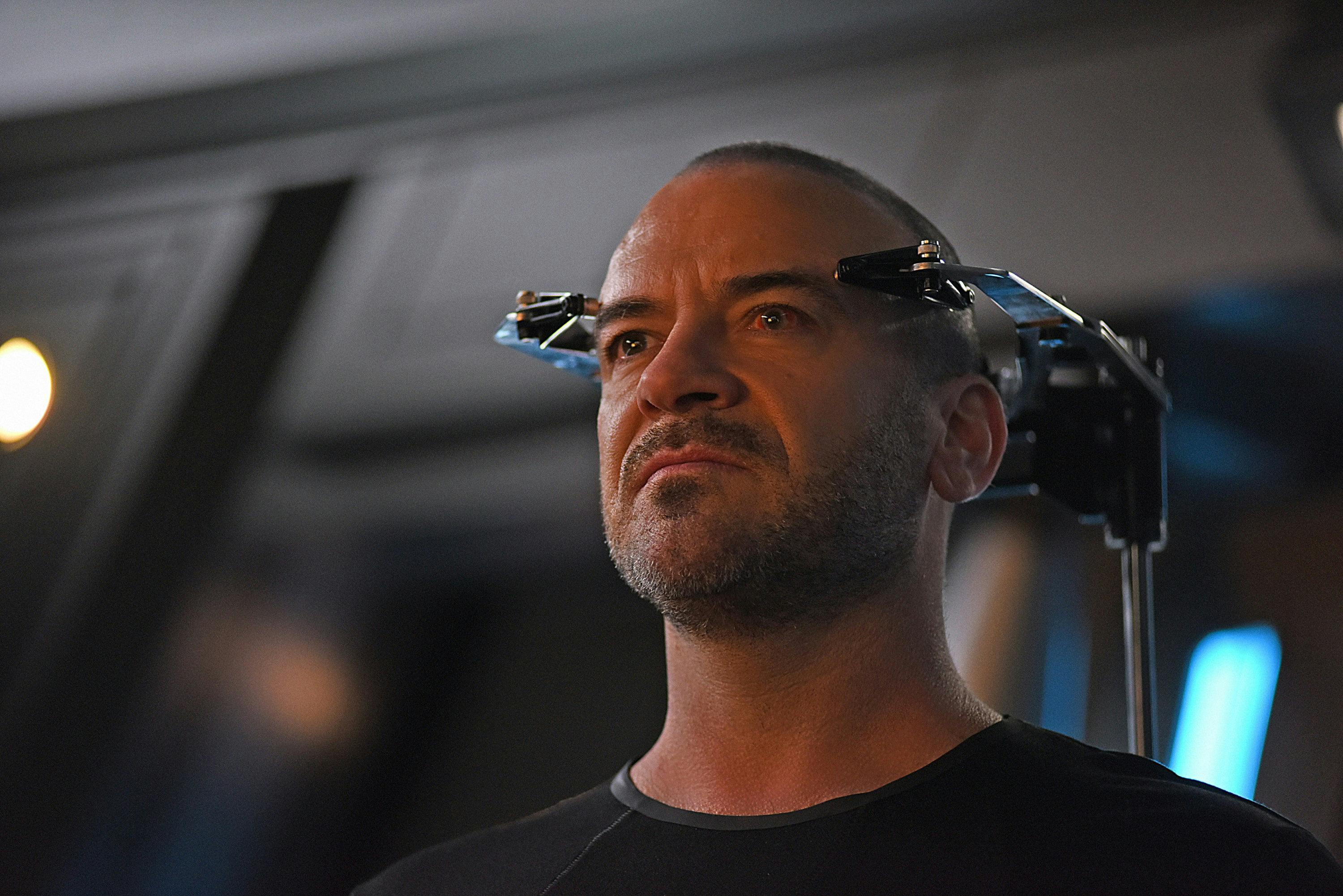

Most of these technologies could be hacked. Data, Voyager’s Doctor, and Airiam all got hacked in Star Trek episodes, and brain implants or interfaces, such as those in development at Elon Musk’s Neuralink, run the same risk of being exploited by hackers. Security experts have already worried about former Vice President Dick Cheney’s pacemaker somehow being hacked, and we’ve already seen “deep fake” YouTube and Instagram videos of former President Obama and Facebook CEO Mark Zuckerberg saying things they didn’t actually say, akin to the holograms of Admiral Patar used by Control to manipulate those who couldn’t tell the difference from the real thing.

Lethal autonomous weapons, like the ones used on Predator drones, which pick targets far from a human behind a computer screen, could also pose deadly problems. “People are concerned that gradual automation could lead to larger swarms of drones and cyber-AI systems operating without humans in the loop or with a human less heavily involved. That could lead to escalation or increasing the stakes of a system failure,” says Miles Brundage, a policy researcher at the company OpenAI.

Missile defense systems and nuclear missiles have already been partly automated, and it’s conceivable that satellites armed with missiles or lasers (or phasers) could eventually become a reality, too. One mistaken weapon fired from a mostly AI-run defense system could be enough to spark a war. With weapons of mass destruction, the toll could be catastrophic.

Some science fiction writers think that AI going awry is likely to happen, and that the potential for disaster could explain why we pre-warp humans haven’t encountered advanced alien races yet: Maybe most civilizations don’t make it that far. At some point in the future, we could reach a tipping point called the “singularity,” where machine intelligence overtakes human intelligence. With superhuman technologies advancing exponentially and unchecked, this Pandora’s box of catastrophe could never be closed again. Even if there’s no nuclear war, we could end up with excessively powerful paperclip-making robots turning us all into paperclips or Amazon factory robots stuffing us all into boxes.

These problems all stem from the concept of broad superhuman intelligence, as opposed to the narrow intelligence we see today. While Google’s search engine searches better than any person can, and Ford’s and General Motors’ self-driving cars can (almost) drive better, they can’t really do anything else. “What scares people is this notion of broad and superhuman. It can do everything a human can do, but better. We basically have no idea as an AI community how to build a broad superhuman device, and I’m actually skeptical that we’ll ever be able to do it,” says Mark Riedl, director of Georgia Tech’s Entertainment Intelligence Lab.

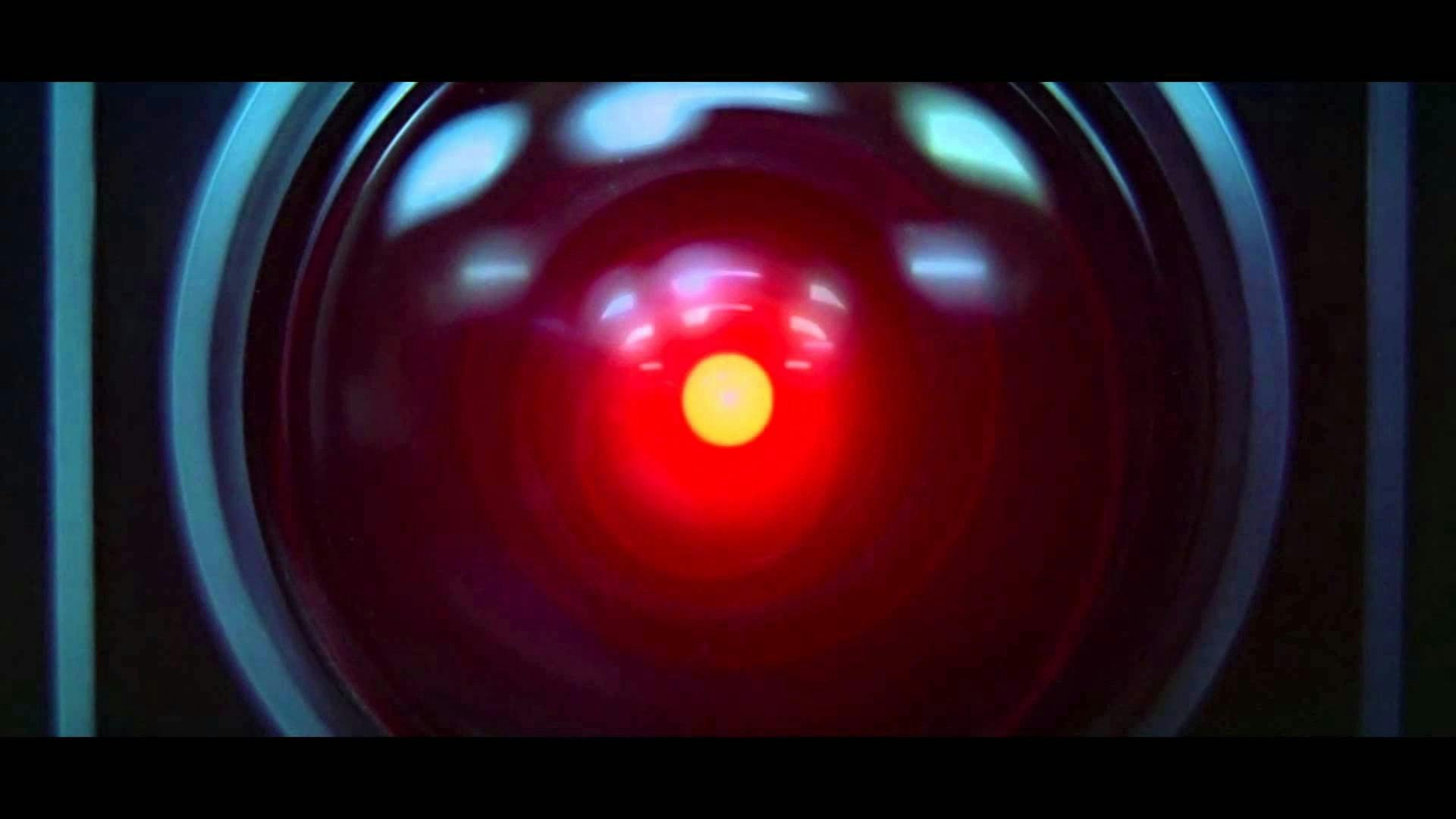

MGM

While Control on Discovery had broad superhuman intelligence, Riedl points out that a more conceivable possibility in the near future would be something like HAL in 2001: A Space Odyssey, whose creator gives it a wrong or imprecise goal, resulting in unintended effects. In another example, suppose someone in the future became ill and asked their hypothetical caregiver robot to go to the pharmacy and get some drug for them as soon as possible. That robot could interpret the instruction too literally, knocking everyone down along the way, breaking into the store, and stealing the medicine, which is not exactly what was intended.

Nevertheless, while AI technologies continue their rapid advance, people’s concerns about their ethical implications have grown at the same time, and scientists, policymakers, and journalists have drawn attention to them. “People are very on the ball, making sure that this gets done right, because they recognize the potential scope of things,” Holland says. “So, I’m at least cautiously optimistic.”

Ramin Skibba is an astrophysicist turned science writer and freelance journalist, and he's president of the San Diego Science Writers Association. You can find him on Twitter at @raminskibba and at raminskibba.net, and catch his newsletter, Ramin's Space.